Introduction to ML Part 1

This tutorial is designed to provide a bird’s eye view of the ML packages landscape. The goal is not to give an in-depth explanation of all the features of each package, but rather to demonstrate the purpose of a few widely used ML packages. For more details, we refer the reader to the packages’ documentation and other online tutorials.

You can go through the Jupyter, Numpy and Matplotlib sections before the course starts, and then start studying the next sections after you have completed unit 1.

https://github.com/varal7/ml-tutorial

Jupyter

Jupyter is not, strictly speaking, an ML package. It provides a browser front-end connected to an instance of IPython. This gives you a REPL for quick testing and lets you create documents that intertwine code, output, images, and text. This is great for prototyping, demonstrations and tutorials, but poorly suited to writing and maintaining larger codebases.

6*7

42

def tokenize(text):
    return text.split(" ")

text = "In a shocking finding, scientist discovered a herd of unicorns living in a remote, previously unexplored valley"

print(tokenize(text))

['In', 'a', 'shocking', 'finding,', 'scientist', 'discovered', 'a', 'herd', 'of', 'unicorns', 'living', 'in', 'a', 'remote,', 'previously', 'unexplored', 'valley']
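Splitting on a single space leaves punctuation attached to words ('finding,', 'remote,'). It also produces empty strings when there are repeated spaces. The sketch below shows one possible alternative. `tokenize_words` is a hypothetical helper, not part of any library, and it assumes that "word" means a run of letters, digits or underscores, which is what the regex `\w+` matches.

```python
import re

def tokenize_words(text):
    # Hypothetical variant: keep runs of letters, digits and underscores, dropping punctuation
    return re.findall(r"\w+", text)

sample = "a remote, previously unexplored valley"
print(sample.split(" "))       # ['a', 'remote,', 'previously', 'unexplored', 'valley']
print(tokenize_words(sample))  # ['a', 'remote', 'previously', 'unexplored', 'valley']

# split(" ") also produces empty strings on repeated spaces; split() with no argument does not
print("a  b".split(" "))  # ['a', '', 'b']
print("a  b".split())     # ['a', 'b']
```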

Numpy

import numpy as np

Numpy is designed to handle large multidimensional arrays and to enable efficient computations on them. Under the hood, it runs pre-compiled C code, which is much faster than, say, a Python for loop.
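To make the speed claim concrete, here is a small comparison of the same sum of squares computed with a Python loop and with a single vectorized call. The array size is an arbitrary choice, and the timings depend entirely on your machine, so none are quoted here.

```python
import time
import numpy as np

values = np.arange(100_000, dtype=np.float64)

# Pure-Python loop: the interpreter executes one iteration per element
start = time.perf_counter()
loop_total = 0.0
for v in values:
    loop_total += v * v
loop_seconds = time.perf_counter() - start

# Vectorized: one call, with the loop running in compiled code
start = time.perf_counter()
vec_total = np.dot(values, values)
vec_seconds = time.perf_counter() - start

print(np.isclose(loop_total, vec_total))
print("loop: %.4fs, numpy: %.6fs" % (loop_seconds, vec_seconds))
```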

In the Numpy tutorial, we covered the basics of Numpy: numpy arrays, element-wise operations, matrix operations and generating random matrices.

In this section, we’ll cover indexing, slicing and broadcasting, which are useful concepts that will be reused in Pandas and PyTorch.

Indexing and slicing

Numpy arrays can be indexed and sliced like regular Python lists.

a = [1, 2, 3, 4, 5, 6, 7, 8, 9]

# The element at index 2 is 3
print(a[2])

# a[start:stop] includes index start but excludes index stop, so this gives indices 2 and 3
print(a[2:4])

# The third parameter is the step: start at index 2 (value 3), then move 2 at a time to 5 and 7
print(a[2:7:2])

# Negative indices count from the end: -1 is the last element,
# and since stop is excluded, the slice ends just before it
print(a[2:-1])

# A negative step walks backwards, so start must be larger than stop:
# start at index 6 (value 7), step -2, and stop before index 1
print(a[6:1:-2])

3

[3, 4]

[3, 5, 7]

[3, 4, 5, 6, 7, 8]

[7, 5, 3]

a_py = [1, 2, 3, 4, 5, 6, 7, 8, 9]

a_np = np.array(a_py)

print(a_py[3:7:2], a_np[3:7:2])

print(a_py[2:-1:2], a_np[2:-1:2])

print(a_py[::-1], a_np[::-1])

[4, 6] [4 6]

[3, 5, 7] [3 5 7]

[9, 8, 7, 6, 5, 4, 3, 2, 1] [9 8 7 6 5 4 3 2 1]

Unlike Python lists, Numpy arrays can also be indexed by other arrays of indices.

idx = np.array([7,2])

a_np[idx]

array([8, 3])

# a_py[idx]  # raises a TypeError: a plain Python list cannot be indexed by an array

This allows convenient querying, reindexing and even sorting.

ages = np.random.randint(low=30, high=60, size=10)

heights = np.random.randint(low=150, high=210, size=10)

print(ages)

print(heights)

[35 57 44 56 57 37 48 32 45 54]

[168 204 188 204 177 186 160 207 186 182]

print(ages < 50)

[ True False True False False True True True True False]

print(heights[ages < 50])

print(ages[ages < 50])

[168 188 186 160 207 186]

[35 44 37 48 32 45]
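Boolean masks can be combined, and they can also be used for assignment. The sketch below copies the ages and heights printed above into new arrays (`ages_demo`, `heights_demo`) so that the results are reproducible. The original cell draws its values at random. The age range and the cap of 200 are arbitrary choices for illustration.

```python
import numpy as np

# Values copied from the printed output above
ages_demo = np.array([35, 57, 44, 56, 57, 37, 48, 32, 45, 54])
heights_demo = np.array([168, 204, 188, 204, 177, 186, 160, 207, 186, 182])

# Combine conditions with & (and), | (or), ~ (not). The parentheses are required
# because & binds more tightly than comparison operators such as >= and <
in_forties = (ages_demo >= 40) & (ages_demo < 50)
print(ages_demo[in_forties])     # [44 48 45]
print(heights_demo[in_forties])  # [188 160 186]

# np.flatnonzero turns a boolean mask back into integer indices
print(np.flatnonzero(in_forties))  # [2 6 8]

# A mask on the left-hand side modifies only the selected entries
capped = heights_demo.copy()
capped[capped > 200] = 200
print(capped)
```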

shuffled_idx = np.random.permutation(10)

print(shuffled_idx)

print(ages[shuffled_idx])

print(heights[shuffled_idx])

[6 1 8 2 7 5 4 3 0 9]

[48 57 45 44 32 37 57 56 35 54]

[160 204 186 188 207 186 177 204 168 182]

sorted_idx = np.argsort(ages)

print(sorted_idx)

print(ages[sorted_idx])

print(heights[sorted_idx])

[7 0 5 2 8 6 9 3 1 4]

[32 35 37 44 45 48 54 56 57 57]

[207 168 186 188 186 160 182 204 204 177]
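Two details about `np.argsort` are worth knowing. The first concerns ties, such as the two people aged 57: the default algorithm does not promise to keep tied elements in their original order, and `kind="stable"` does. The second is that descending order can be obtained by sorting the negated values. The sketch below reuses the printed values as fixed arrays, so its outputs are deterministic.

```python
import numpy as np

ages_demo = np.array([35, 57, 44, 56, 57, 37, 48, 32, 45, 54])
heights_demo = np.array([168, 204, 188, 204, 177, 186, 160, 207, 186, 182])

# argsort returns the permutation that sorts ages; applying it to both arrays
# keeps every (age, height) pair aligned
order = np.argsort(ages_demo, kind="stable")  # ties keep their original order
print(order)  # [7 0 5 2 8 6 9 3 1 4]

# Descending order: sort the negated ages (still stable on ties)
desc = np.argsort(-ages_demo, kind="stable")
print(ages_demo[desc])     # [57 57 56 54 48 45 44 37 35 32]
print(heights_demo[desc])  # [204 177 204 182 160 186 188 186 168 207]
```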

Broadcasting

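When Numpy is asked to perform an operation between arrays of different shapes, it “broadcasts” the smaller one across the larger one. This works when the shapes are compatible: comparing dimensions from the last one backwards, each pair of sizes must either be equal or include a 1 (missing leading dimensions count as 1).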

a = np.array([4, 5, 6])

b = np.array([2, 2, 2])

a * b

array([ 8, 10, 12])

a = np.array([4, 5, 6])

b = 2

a * b

array([ 8, 10, 12])

The two snippets of code above produce the same result, but the second is easier to read and also more efficient, since broadcasting never materializes the array of 2s.

a = np.arange(10).reshape(1,10)

b = np.arange(12).reshape(12,1)

print(a)

print(b)

[[0 1 2 3 4 5 6 7 8 9]]

[[ 0]
 [ 1]
 [ 2]
 [ 3]
 [ 4]
 [ 5]
 [ 6]
 [ 7]
 [ 8]
 [ 9]
 [10]
 [11]]

print(a * b)

[[ 0  0  0  0  0  0  0  0  0  0]
 [ 0  1  2  3  4  5  6  7  8  9]
 [ 0  2  4  6  8 10 12 14 16 18]
 [ 0  3  6  9 12 15 18 21 24 27]
 [ 0  4  8 12 16 20 24 28 32 36]
 [ 0  5 10 15 20 25 30 35 40 45]
 [ 0  6 12 18 24 30 36 42 48 54]
 [ 0  7 14 21 28 35 42 49 56 63]
 [ 0  8 16 24 32 40 48 56 64 72]
 [ 0  9 18 27 36 45 54 63 72 81]
 [ 0 10 20 30 40 50 60 70 80 90]
 [ 0 11 22 33 44 55 66 77 88 99]]
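The shape rule behind the table above can be checked directly. The sketch assumes NumPy 1.20 or later, which provides `np.broadcast_shapes`. It also shows a typical ML use of broadcasting, centering each feature column, and an incompatible pair of shapes. The small matrix `X_small` is made up for illustration.

```python
import numpy as np

row = np.arange(10).reshape(1, 10)   # shape (1, 10)
col = np.arange(12).reshape(12, 1)   # shape (12, 1)

# Compare shapes from the right: 10 vs 1 -> 10, then 1 vs 12 -> 12
print(np.broadcast_shapes(row.shape, col.shape))  # (12, 10)
table = row * col                                   # the multiplication table printed above

# A common ML use: center every feature (column) by subtracting its mean
X_small = np.array([[1.0, 10.0],
                    [3.0, 30.0]])                   # shape (2, 2)
X_centered = X_small - X_small.mean(axis=0)         # (2, 2) - (2,) -> (2, 2)
print(X_centered)

# Incompatible shapes: the trailing sizes 2 and 3 differ and neither is 1
caught = False
try:
    np.ones((3, 2)) + np.ones(3)
except ValueError as err:
    caught = True
    print("ValueError:", err)
```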

Matplotlib

%matplotlib inline

import matplotlib.pyplot as plt

plt.rcParams['figure.figsize'] = [10, 7]

Matplotlib is the go-to library for producing plots in Python. It comes with two APIs: a MATLAB-like, state-based interface (pyplot) that many people have learned to use and love, and an object-oriented API, which we recommend.

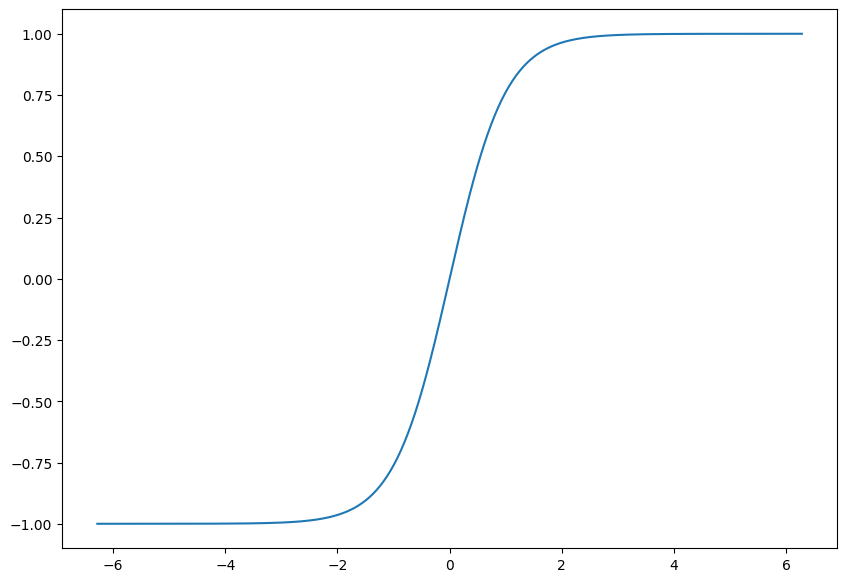
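Here is a side-by-side sketch of the two styles drawing the same curve. In the pyplot style, each call acts on whatever figure and axes are "current". In the object-oriented style, you keep explicit handles (`fig_oo`, `ax_oo` are just illustrative names) and call methods on them. This makes it clear which subplot each call affects.

```python
import numpy as np
import matplotlib.pyplot as plt

s = np.linspace(-1, 1, 50)

# MATLAB-like (pyplot) style: each call acts on the "current" figure and axes
plt.figure()
plt.plot(s, s ** 2)
plt.title("pyplot style")
current_ax = plt.gca()  # retrieve the axes pyplot was implicitly drawing on

# Object-oriented style: keep explicit handles and call methods on them
fig_oo, ax_oo = plt.subplots()
ax_oo.plot(s, s ** 2)
ax_oo.set_title("object-oriented style")
```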

x = np.linspace(-2*np.pi, 2*np.pi, 400)

y = np.tanh(x)

fig, ax = plt.subplots()

ax.plot(x, y)

[<matplotlib.lines.Line2D at 0x11ba23490>]

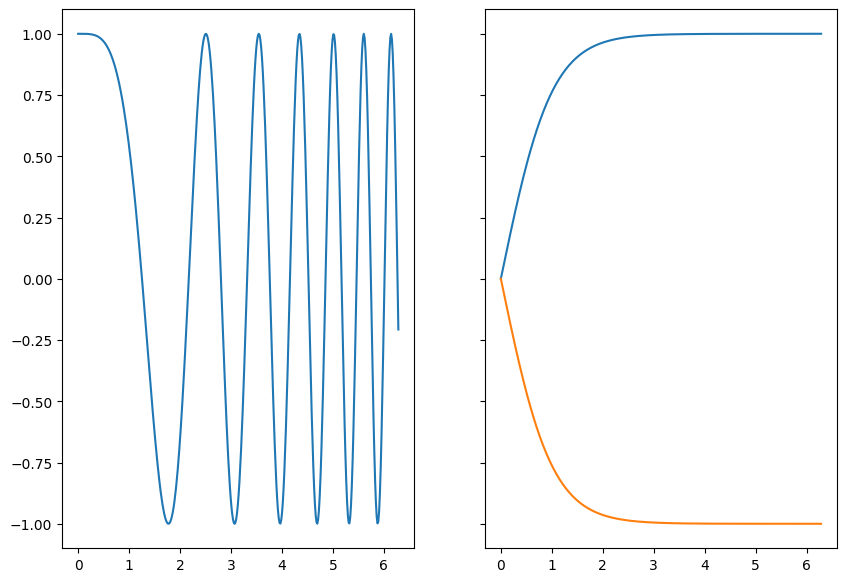

You can draw multiple subplots in the same figure, or multiple functions in the same subplot.

x = np.linspace(0, 2*np.pi, 400)

y1 = np.tanh(x)

y2 = np.cos(x**2)

fig, axes = plt.subplots(1, 2, sharey=True)

axes[1].plot(x, y1)

axes[1].plot(x, -y1)

axes[0].plot(x, y2)

[<matplotlib.lines.Line2D at 0x11c515290>]

Matplotlib also offers many options to customize the colors, labels, axes, etc.

For instance, see this introduction to matplotlib
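As a small taste of these options, the sketch below sets line colors and styles, axis labels, a title, axis limits, a grid and a legend with the object-oriented API. The specific colors, labels and legend position are arbitrary choices.

```python
import numpy as np
import matplotlib.pyplot as plt

t = np.linspace(0, 2 * np.pi, 400)

fig_c, ax_c = plt.subplots()
ax_c.plot(t, np.tanh(t), color="tab:blue", label="tanh(t)")
ax_c.plot(t, np.cos(t ** 2), color="tab:orange", linestyle="--", label="cos(t^2)")
ax_c.set_xlabel("t")
ax_c.set_ylabel("value")
ax_c.set_title("Customized plot")
ax_c.set_xlim(0, 2 * np.pi)
ax_c.grid(True)
ax_c.legend(loc="lower left")  # uses the label= given to each plot call
```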

Scikit-learn (read this after you have completed unit 1)

Scikit-learn includes a number of features and utilities to kickstart your journey in Machine Learning.

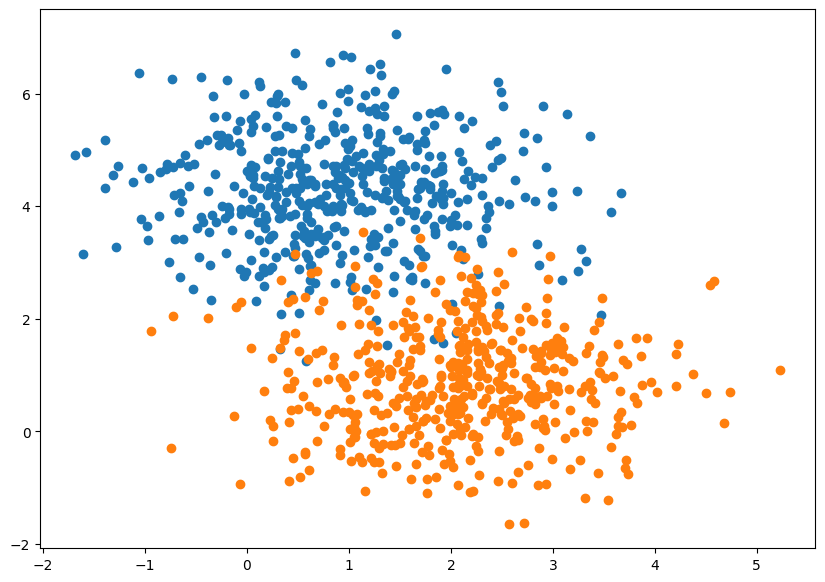

A toy example

from sklearn.datasets import make_blobs

X, y = make_blobs(n_samples=1000, centers=2, random_state=0)

X[:5], y[:5]

(array([[0.4666179 , 3.86571303],
        [2.84382807, 3.32650945],
        [0.61121486, 2.51245978],
        [3.81653365, 1.65175932],
        [1.28097244, 0.62827388]]),
 array([0, 0, 0, 1, 1]))

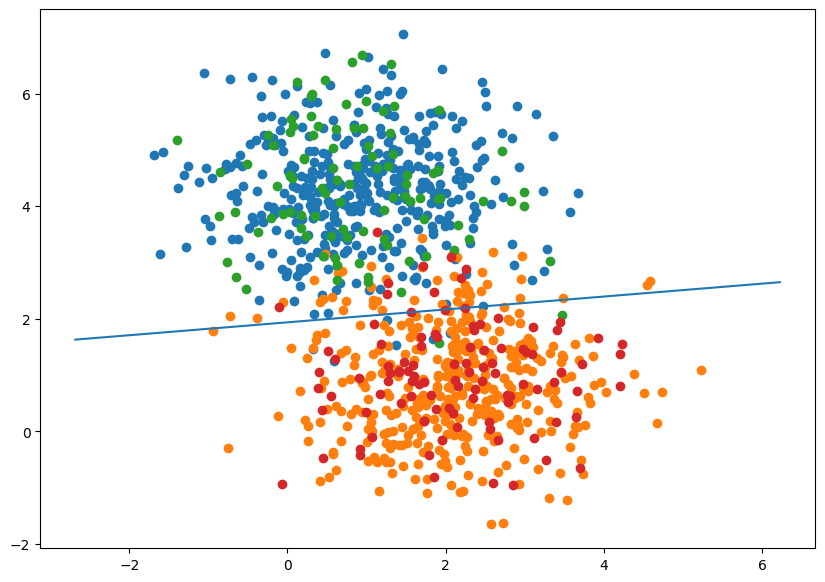

fig, ax = plt.subplots()
for label in [0, 1]:
    mask = (y == label)
    ax.scatter(X[mask, 0], X[mask, 1])

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2)

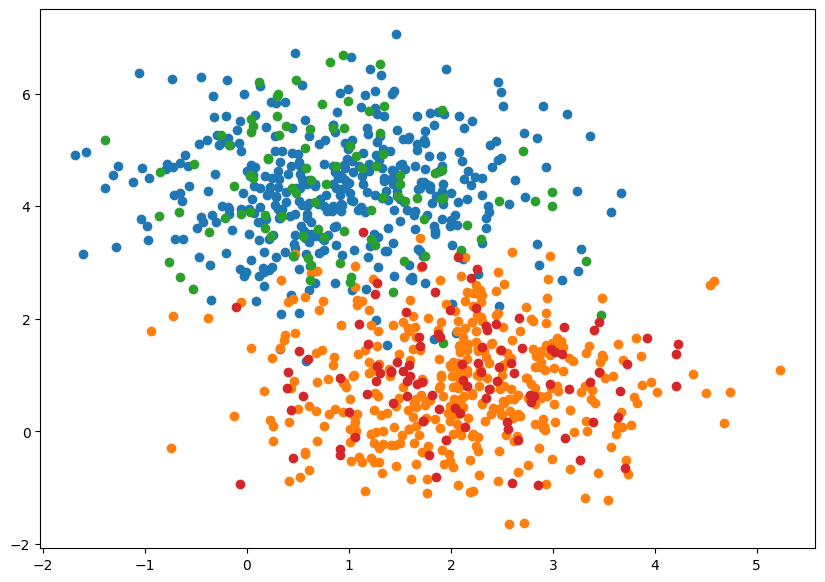

fig, ax = plt.subplots()
for label in [0, 1]:
    mask = (y_train == label)
    ax.scatter(X_train[mask, 0], X_train[mask, 1])

for label in [0, 1]:
    mask = (y_test == label)
    ax.scatter(X_test[mask, 0], X_test[mask, 1])
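Conceptually, a random train/test split shuffles the row indices once and cuts them in two, much like the permutation trick from the Numpy section. The sketch below is a hypothetical helper, `simple_split`, and is not scikit-learn's implementation. It assumes the test set size is `ceil(test_size * n)`. It uses separate `_demo` names so it does not overwrite `X` and `y` in the notebook.

```python
import numpy as np

def simple_split(X, y, test_size=0.2, seed=0):
    # Hypothetical helper: shuffle the row indices once,
    # then use the first ceil(test_size * n) of them as the test set
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(X))
    n_test = int(np.ceil(test_size * len(X)))
    test_idx, train_idx = idx[:n_test], idx[n_test:]
    # The same index arrays are applied to X and y, so features and labels stay paired
    return X[train_idx], X[test_idx], y[train_idx], y[test_idx]

X_demo = np.arange(20).reshape(10, 2)   # row i is [2i, 2i + 1]
y_demo = np.arange(10)                  # label i belongs to row i
X_tr_demo, X_te_demo, y_tr_demo, y_te_demo = simple_split(X_demo, y_demo)
print(y_tr_demo, y_te_demo)
```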

Scikit-learn uses a uniform and very consistent API (every model is trained with fit and queried with predict), making it easy to switch algorithms.

For instance, training and predicting with a perceptron.

from sklearn.linear_model import Perceptron

from sklearn.svm import LinearSVC

from sklearn.metrics import accuracy_score

clf = Perceptron(max_iter=40, random_state=0)

# clf = LinearSVC(max_iter=40, random_state=0)

clf.fit(X_train, y_train)

y_pred = clf.predict(X_test)

print('Test accuracy: %.4f' % accuracy_score(y_test, y_pred))

Test accuracy: 0.9400
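Because every estimator exposes the same fit/predict methods, trying several models is just a loop. The sketch regenerates the same blobs but fixes the split with `random_state=0`, so its accuracies need not match the 0.9400 above. `LogisticRegression` is added here purely as an extra example of a linear classifier. `dual=False` is passed to `LinearSVC` explicitly to avoid the FutureWarning shown later in this notebook.

```python
from sklearn.datasets import make_blobs
from sklearn.linear_model import LogisticRegression, Perceptron
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.svm import LinearSVC

X_demo, y_demo = make_blobs(n_samples=1000, centers=2, random_state=0)
X_tr, X_te, y_tr, y_te = train_test_split(X_demo, y_demo, test_size=0.2, random_state=0)

results = {}
for model in [Perceptron(max_iter=40, random_state=0),
              LinearSVC(dual=False, random_state=0),
              LogisticRegression()]:
    model.fit(X_tr, y_tr)                  # identical call for every estimator
    y_hat = model.predict(X_te)            # identical call for every estimator
    results[type(model).__name__] = accuracy_score(y_te, y_hat)

for name, acc in results.items():
    print("%s: %.4f" % (name, acc))
```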

theta = clf.coef_[0]          # weights (one per feature) of the linear classifier
theta_0 = clf.intercept_[0]   # bias term (intercept_ is a length-1 array)

fig, ax = plt.subplots()
for label in [0, 1]:
    mask = (y_train == label)
    ax.scatter(X_train[mask, 0], X_train[mask, 1])

for label in [0, 1]:
    mask = (y_test == label)
    ax.scatter(X_test[mask, 0], X_test[mask, 1])

# Decision boundary: the points where the classifier's score is zero
x_bnd = np.linspace(X[:, 0].min() - 1, X[:, 0].max() + 1, 400)
y_bnd = - x_bnd * (theta[0] / theta[1]) - (theta_0 / theta[1])
ax.plot(x_bnd, y_bnd)

[<matplotlib.lines.Line2D at 0x13c6cd550>]

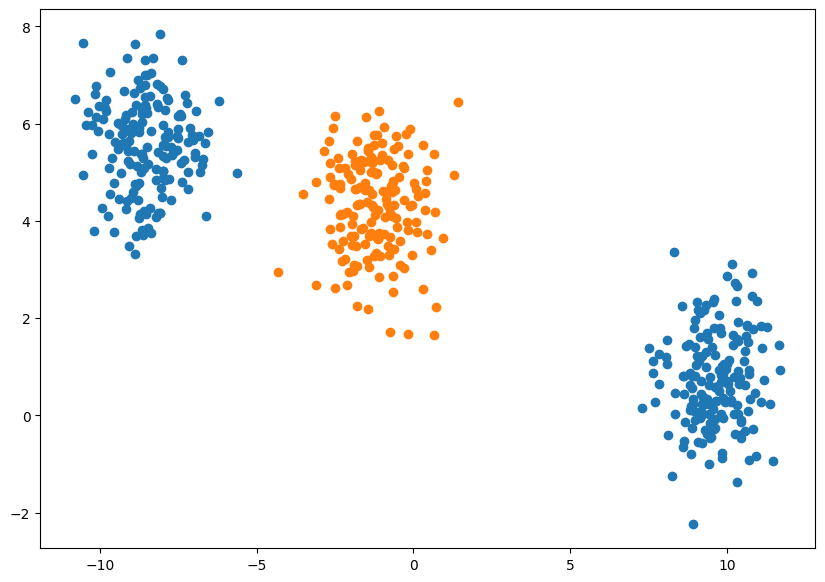
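The expression used for y_bnd comes from setting the linear score to zero. Write θ_1, θ_2 for theta[0], theta[1] and θ_0 for theta_0, and assume θ_2 ≠ 0:

$$
\theta_1 x_1 + \theta_2 x_2 + \theta_0 = 0
\quad\Longrightarrow\quad
x_2 = -\frac{\theta_1}{\theta_2}\,x_1 - \frac{\theta_0}{\theta_2}
$$

Points on one side of this line get a positive score (one class), and points on the other side get a negative score (the other class).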

Another toy example

X, y = make_blobs(n_samples=500, centers=3, random_state=7)

y[y==2] = 0  # merge the third blob into class 0, so class 0 now consists of two separate blobs

fig, ax = plt.subplots()
for label in [0, 1]:
    mask = (y == label)
    ax.scatter(X[mask, 0], X[mask, 1])

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2)

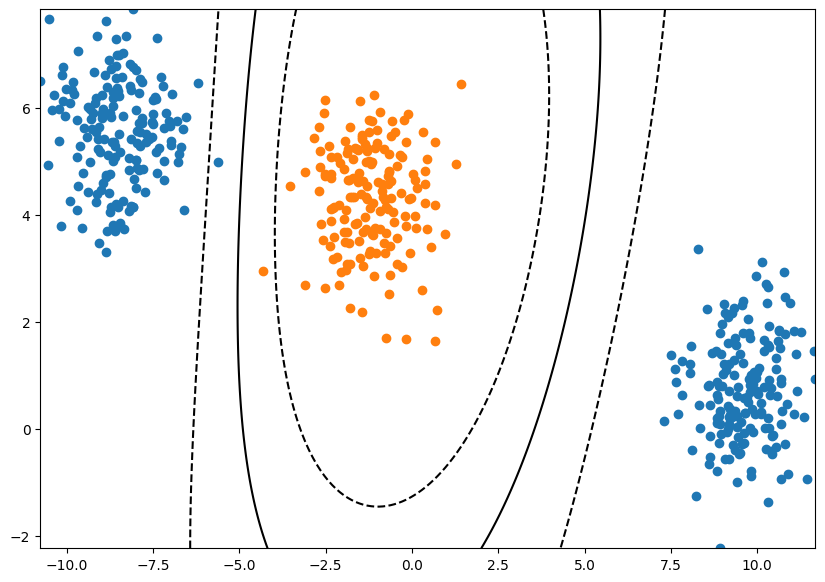

from sklearn.svm import SVC

# clf = SVC(kernel="linear", random_state=0)

clf = SVC(kernel="rbf", random_state=0)

clf.fit(X_train, y_train)


SVC(random_state=0)

y_pred = clf.predict(X_test)

print('Test accuracy: %.4f' % accuracy_score(y_test, y_pred))

Test accuracy: 1.0000

x_min = X[:, 0].min()

x_max = X[:, 0].max()

y_min = X[:, 1].min()

y_max = X[:, 1].max()

XX, YY = np.mgrid[x_min:x_max:200j, y_min:y_max:200j]

Z = clf.decision_function(np.c_[XX.ravel(), YY.ravel()])

fig, ax = plt.subplots()
for label in [0, 1]:
    mask = (y == label)
    ax.scatter(X[mask, 0], X[mask, 1])

# Level sets of the decision function: solid at 0 (the boundary), dashed at -0.5 and 0.5
Z = Z.reshape(XX.shape)
ax.contour(XX, YY, Z, colors="black",
           linestyles=['--', '-', '--'], levels=[-.5, 0, .5])

<matplotlib.contour.QuadContourSet at 0x13ce4b590>

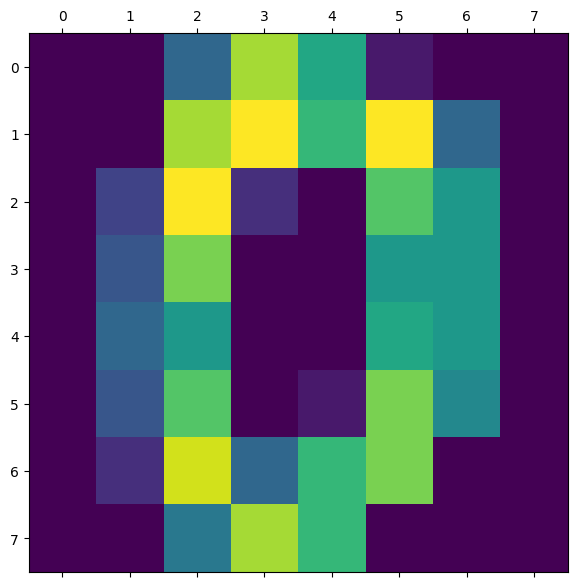
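The curved boundary comes from the RBF kernel, which scores the similarity of two points as K(x, x') = exp(-gamma * ||x - x'||^2). The sketch below computes this kernel matrix with broadcasting (the hypothetical helper `rbf`) and checks it against scikit-learn's `rbf_kernel`. The value gamma=0.5 is arbitrary. By default, recent versions of SVC use gamma='scale', which means 1 / (n_features * X.var()).

```python
import numpy as np
from sklearn.metrics.pairwise import rbf_kernel

def rbf(A, B, gamma):
    # Hypothetical helper: pairwise squared distances via broadcasting,
    # (n, 1, d) - (1, m, d) -> (n, m, d), summed over the feature axis
    sq_dists = ((A[:, None, :] - B[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-gamma * sq_dists)

A = np.array([[0.0, 0.0], [1.0, 1.0]])
B = np.array([[0.0, 0.0], [2.0, 0.0], [1.0, 1.0]])
K = rbf(A, B, gamma=0.5)
print(K)  # identical points score 1, distant points approach 0
print(np.allclose(K, rbf_kernel(A, B, gamma=0.5)))
```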

Classify digits

# from sklearn.datasets import load_breast_cancer

# breast_cancer = load_breast_cancer()

# X, y = breast_cancer.data, breast_cancer.target

# X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

from sklearn.datasets import load_digits

digits = load_digits()

X, y = digits.data, digits.target

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

fig, ax = plt.subplots()

ax.matshow(digits.images[0])

<matplotlib.image.AxesImage at 0x13d045d90>

X_train.shape

(1437, 64)
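The 64 columns are the pixels of each 8x8 image, flattened row by row. Of the 1797 samples, 80% (1437) end up in the training set. The quick check below loads the dataset into a separate variable and confirms that `digits.data` is just `digits.images` reshaped.

```python
import numpy as np
from sklearn.datasets import load_digits

digits_demo = load_digits()
n_samples = len(digits_demo.images)
print(digits_demo.images.shape)  # (1797, 8, 8): one 8x8 grayscale image per sample
print(digits_demo.data.shape)    # (1797, 64): the same pixels, flattened

flat = digits_demo.images.reshape(n_samples, -1)  # -1 lets numpy infer 8 * 8 = 64
print(np.array_equal(flat, digits_demo.data))
```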

clf = Perceptron(max_iter=40, random_state=0)

clf.fit(X_train, y_train)

y_pred = clf.predict(X_test)

print('Accuracy: %.4f' % accuracy_score(y_test, y_pred))

Accuracy: 0.9389

clf = LinearSVC(C=1, random_state=0)

clf.fit(X_train, y_train)

y_pred = clf.predict(X_test)

print('Accuracy: %.4f' % accuracy_score(y_test, y_pred))

Accuracy: 0.9333

/Users/n03an/.pyenv/versions/3.11.5/lib/python3.11/site-packages/sklearn/svm/_classes.py:32: FutureWarning: The default value of `dual` will change from `True` to `'auto'` in 1.5. Set the value of `dual` explicitly to suppress the warning.

warnings.warn(

/Users/n03an/.pyenv/versions/3.11.5/lib/python3.11/site-packages/sklearn/svm/_base.py:1250: ConvergenceWarning: Liblinear failed to converge, increase the number of iterations.

warnings.warn(
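Both warnings above come from LinearSVC. The FutureWarning asks for `dual` to be set explicitly, and the ConvergenceWarning says liblinear stopped at its iteration limit. One possible way to address both is sketched below, assuming a recent scikit-learn: choose the primal solver (`dual=False`), standardize the pixels, and allow more iterations. Whether convergence is reached still depends on the data, and the accuracy printed may differ from the 0.9333 above.

```python
from sklearn.datasets import load_digits
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import LinearSVC

X_d, y_d = load_digits(return_X_y=True)
X_tr, X_te, y_tr, y_te = train_test_split(X_d, y_d, test_size=0.2, random_state=0)

# dual=False selects the primal solver explicitly (no FutureWarning about the default);
# scaling features to zero mean and unit variance usually helps the solver converge
pipe = make_pipeline(StandardScaler(),
                     LinearSVC(C=1, dual=False, max_iter=5000, random_state=0))
pipe.fit(X_tr, y_tr)
acc_scaled = pipe.score(X_te, y_te)
print("Accuracy: %.4f" % acc_scaled)
```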

from sklearn.metrics import confusion_matrix

confusion_matrix(y_test, clf.predict(X_test))

array([[27,  0,  0,  0,  0,  0,  0,  0,  0,  0],
       [ 0, 32,  0,  0,  0,  0,  1,  0,  1,  1],
       [ 0,  1, 33,  2,  0,  0,  0,  0,  0,  0],
       [ 0,  0,  0, 29,  0,  0,  0,  0,  0,  0],
       [ 0,  0,  0,  0, 30,  0,  0,  0,  0,  0],
       [ 0,  0,  0,  0,  0, 38,  1,  0,  0,  1],
       [ 0,  1,  0,  0,  0,  0, 43,  0,  0,  0],
       [ 0,  1,  0,  0,  1,  0,  0, 37,  0,  0],
       [ 0,  3,  1,  1,  0,  0,  2,  0, 29,  3],
       [ 0,  0,  0,  2,  0,  1,  0,  0,  0, 38]])
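In scikit-learn's convention, row i counts the test samples whose true digit is i, and column j counts how often j was predicted. Correct predictions therefore sit on the diagonal. The sketch below copies the matrix printed above and derives the accuracy and per-digit metrics from it. The accuracy matches the 0.9333 reported earlier, and the digit 8 turns out to be the hardest to recognize.

```python
import numpy as np

# Confusion matrix copied from the output above: rows = true digit, columns = predicted digit
cm = np.array([[27, 0, 0, 0, 0, 0, 0, 0, 0, 0],
               [0, 32, 0, 0, 0, 0, 1, 0, 1, 1],
               [0, 1, 33, 2, 0, 0, 0, 0, 0, 0],
               [0, 0, 0, 29, 0, 0, 0, 0, 0, 0],
               [0, 0, 0, 0, 30, 0, 0, 0, 0, 0],
               [0, 0, 0, 0, 0, 38, 1, 0, 0, 1],
               [0, 1, 0, 0, 0, 0, 43, 0, 0, 0],
               [0, 1, 0, 0, 1, 0, 0, 37, 0, 0],
               [0, 3, 1, 1, 0, 0, 2, 0, 29, 3],
               [0, 0, 0, 2, 0, 1, 0, 0, 0, 38]])

accuracy = np.trace(cm) / cm.sum()        # correct predictions / all test samples
recall = np.diag(cm) / cm.sum(axis=1)     # per true digit: fraction correctly recognized
precision = np.diag(cm) / cm.sum(axis=0)  # per predicted digit: fraction that was right

print("Accuracy: %.4f" % accuracy)        # Accuracy: 0.9333
print("Lowest recall: digit %d" % recall.argmin())
```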

Scikit-learn also includes utilities to quickly compute a cross validation score…

clf = LinearSVC(C=1, random_state=0)

from sklearn.model_selection import cross_val_score

scores = cross_val_score(clf, X_train, y_train, cv=5)

print("Mean: %.4f, Std: %.4f" % (np.mean(scores), np.std(scores)))


Mean: 0.9436, Std: 0.0158


clf = LinearSVC(C=0.1, random_state=0)

scores = cross_val_score(clf, X_train, y_train, cv=5)

print("Mean: %.4f, Std: %.4f" % (np.mean(scores), np.std(scores)))


Mean: 0.9534, Std: 0.0065
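For a classifier, `cv=5` makes `cross_val_score` use five stratified folds without shuffling. Each fold trains a fresh copy of the model and scores it with accuracy on the held-out part. The sketch below reproduces this by hand and checks that the scores agree. It uses `dual=False`, so its numbers are not expected to equal the 0.9534 above.

```python
import numpy as np
from sklearn.base import clone
from sklearn.datasets import load_digits
from sklearn.model_selection import StratifiedKFold, cross_val_score, train_test_split
from sklearn.svm import LinearSVC

X_d, y_d = load_digits(return_X_y=True)
X_tr, _, y_tr, _ = train_test_split(X_d, y_d, test_size=0.2, random_state=0)

est = LinearSVC(C=0.1, dual=False, random_state=0)

folds = StratifiedKFold(n_splits=5)  # each fold keeps roughly the same class proportions
manual_scores = []
for train_idx, val_idx in folds.split(X_tr, y_tr):
    model = clone(est)  # fresh, unfitted copy with the same hyperparameters
    model.fit(X_tr[train_idx], y_tr[train_idx])
    manual_scores.append(model.score(X_tr[val_idx], y_tr[val_idx]))  # accuracy

auto_scores = cross_val_score(est, X_tr, y_tr, cv=5)
print("Mean: %.4f, Std: %.4f" % (np.mean(manual_scores), np.std(manual_scores)))
print(np.allclose(manual_scores, auto_scores))
```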


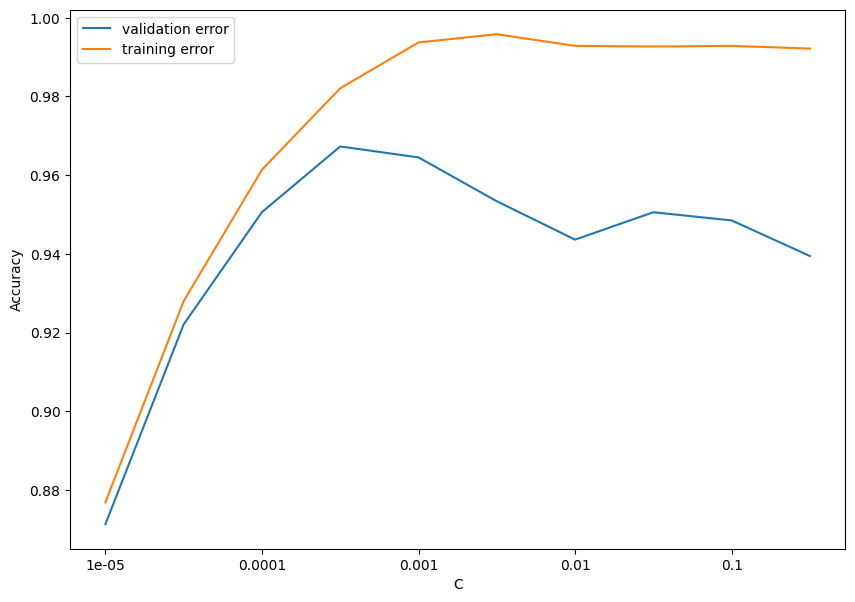

… or to perform a grid search

from sklearn.model_selection import GridSearchCV

clf = LinearSVC(random_state=0)

param_grid = {'C': 10. ** np.arange(-6, 4)}

grid_search = GridSearchCV(clf, param_grid=param_grid, cv=5, verbose=3, return_train_score=True)

grid_search.fit(X_train, y_train);


Fitting 5 folds for each of 10 candidates, totalling 50 fits

[CV 1/5] END .......C=1e-06;, score=(train=0.883, test=0.844) total time= 0.0s

[CV 2/5] END .......C=1e-06;, score=(train=0.880, test=0.865) total time= 0.0s

[CV 3/5] END .......C=1e-06;, score=(train=0.869, test=0.909) total time= 0.0s

[CV 4/5] END .......C=1e-06;, score=(train=0.880, test=0.840) total time= 0.0s

[CV 5/5] END .......C=1e-06;, score=(train=0.873, test=0.899) total time= 0.0s

[CV 1/5] END .......C=1e-05;, score=(train=0.929, test=0.906) total time= 0.0s

[CV 2/5] END .......C=1e-05;, score=(train=0.930, test=0.917) total time= 0.0s

[CV 3/5] END .......C=1e-05;, score=(train=0.923, test=0.944) total time= 0.0s

[CV 4/5] END .......C=1e-05;, score=(train=0.933, test=0.906) total time= 0.0s

[CV 5/5] END .......C=1e-05;, score=(train=0.925, test=0.937) total time= 0.0s

[CV 1/5] END ......C=0.0001;, score=(train=0.963, test=0.938) total time= 0.0s

[CV 2/5] END ......C=0.0001;, score=(train=0.964, test=0.941) total time= 0.0s

[CV 3/5] END ......C=0.0001;, score=(train=0.960, test=0.969) total time= 0.0s

[CV 4/5] END ......C=0.0001;, score=(train=0.962, test=0.937) total time= 0.0s


[CV 5/5] END ......C=0.0001;, score=(train=0.957, test=0.969) total time= 0.0s

[CV 1/5] END .......C=0.001;, score=(train=0.983, test=0.962) total time= 0.0s

[CV 2/5] END .......C=0.001;, score=(train=0.983, test=0.965) total time= 0.0s

[CV 3/5] END .......C=0.001;, score=(train=0.983, test=0.969) total time= 0.0s

[CV 4/5] END .......C=0.001;, score=(train=0.981, test=0.962) total time= 0.0s

[CV 5/5] END .......C=0.001;, score=(train=0.982, test=0.979) total time= 0.0s


[CV 1/5] END ........C=0.01;, score=(train=0.992, test=0.958) total time= 0.1s


[CV 2/5] END ........C=0.01;, score=(train=0.994, test=0.962) total time= 0.1s

[CV 3/5] END ........C=0.01;, score=(train=0.996, test=0.969) total time= 0.1s


[CV 4/5] END ........C=0.01;, score=(train=0.993, test=0.962) total time= 0.1s


[CV 5/5] END ........C=0.01;, score=(train=0.994, test=0.972) total time= 0.1s

[CV 1/5] END .........C=0.1;, score=(train=0.997, test=0.948) total time= 0.1s


[CV 2/5] END .........C=0.1;, score=(train=0.996, test=0.965) total time= 0.1s


[CV 3/5] END .........C=0.1;, score=(train=0.998, test=0.948) total time= 0.1s

[CV 4/5] END .........C=0.1;, score=(train=0.992, test=0.955) total time= 0.1s


[CV 5/5] END .........C=0.1;, score=(train=0.996, test=0.951) total time= 0.1s


[CV 1/5] END .........C=1.0;, score=(train=0.993, test=0.938) total time= 0.1s

[CV 2/5] END .........C=1.0;, score=(train=0.994, test=0.969) total time= 0.1s


[CV 3/5] END .........C=1.0;, score=(train=0.989, test=0.920) total time= 0.1s


[CV 4/5] END .........C=1.0;, score=(train=0.994, test=0.948) total time= 0.1s

[CV 5/5] END .........C=1.0;, score=(train=0.995, test=0.944) total time= 0.1s


[CV 1/5] END ........C=10.0;, score=(train=0.998, test=0.944) total time= 0.1s


[CV 2/5] END ........C=10.0;, score=(train=0.997, test=0.972) total time= 0.1s

[CV 3/5] END ........C=10.0;, score=(train=0.997, test=0.941) total time= 0.1s

[CV 4/5] END ........C=10.0;, score=(train=0.977, test=0.951) total time= 0.1s

[CV 5/5] END ........C=10.0;, score=(train=0.994, test=0.944) total time= 0.1s

[CV 1/5] END .......C=100.0;, score=(train=0.993, test=0.941) total time= 0.1s

[CV 2/5] END .......C=100.0;, score=(train=0.992, test=0.965) total time= 0.1s

[CV 3/5] END .......C=100.0;, score=(train=0.997, test=0.937) total time= 0.1s

[CV 4/5] END .......C=100.0;, score=(train=0.990, test=0.951) total time= 0.1s

[CV 5/5] END .......C=100.0;, score=(train=0.993, test=0.948) total time= 0.1s

[CV 1/5] END ......C=1000.0;, score=(train=0.988, test=0.931) total time= 0.1s

[CV 2/5] END ......C=1000.0;, score=(train=0.989, test=0.955) total time= 0.1s

[CV 3/5] END ......C=1000.0;, score=(train=0.994, test=0.916) total time= 0.1s

[CV 4/5] END ......C=1000.0;, score=(train=0.994, test=0.944) total time= 0.1s

[CV 5/5] END ......C=1000.0;, score=(train=0.997, test=0.951) total time= 0.1s

print(grid_search.best_params_)

{'C': 0.001}

print(grid_search.best_score_)

0.9672981997677119

y_pred = grid_search.predict(X_test)

print('Accuracy: %.4f' % accuracy_score(y_test, y_pred))

Accuracy: 0.9639
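If a search like this prints FutureWarning and ConvergenceWarning messages from liblinear, they can usually be addressed in the estimator itself. The sketch below assumes the estimator is sklearn's LinearSVC (the warnings point to it) and substitutes the bundled digits dataset for the tutorial's own data, which is not shown in this section. It sets dual explicitly and raises max_iter; the grid is a smaller, illustrative one chosen for speed.

```python
from sklearn.datasets import load_digits
from sklearn.metrics import accuracy_score
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.svm import LinearSVC

# Stand-in data (assumption): the tutorial's dataset is not shown here
X, y = load_digits(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

# dual=False solves the primal problem, usually preferable when n_samples > n_features;
# setting it explicitly also silences the FutureWarning about its default value.
# A larger max_iter gives liblinear more iterations before it gives up.
model = LinearSVC(dual=False, max_iter=10000)
param_grid = {'C': [1e-3, 1e-2, 1e-1, 1.0]}  # illustrative grid, smaller than the tutorial's

# return_train_score=True also records training scores in cv_results_
grid_search = GridSearchCV(model, param_grid, cv=5, return_train_score=True)
grid_search.fit(X_train, y_train)

print(grid_search.best_params_)
print('Accuracy: %.4f' % accuracy_score(y_test, grid_search.predict(X_test)))
```

The exact scores will differ from the ones above, since both the data and the grid are different.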

And a lot more features! We have only looked at some classification models and some model selection features, but sklearn can also be used for regression, clustering, dimensionality reduction, preprocessing and more.
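The same fit/predict interface carries over to these other tasks. A minimal sketch on synthetic data; the data and the choice of LinearRegression and KMeans are illustrative assumptions, not part of the original tutorial:

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.linear_model import LinearRegression

rng = np.random.default_rng(0)

# Regression: recover y = 3x + 2 from noisy synthetic samples
X = rng.uniform(0, 10, size=(100, 1))
y = 3 * X[:, 0] + 2 + rng.normal(0, 0.5, size=100)
reg = LinearRegression().fit(X, y)
print(reg.coef_, reg.intercept_)  # close to [3.] and 2

# Clustering: two well-separated blobs, no labels needed
blobs = np.vstack([rng.normal(0, 0.3, size=(50, 2)),
                   rng.normal(5, 0.3, size=(50, 2))])
km = KMeans(n_clusters=2, n_init=10, random_state=0).fit(blobs)
print(np.bincount(km.labels_))  # two clusters of 50 points each
```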

Pandas#

import pandas as pd

Pandas (Python Data Analysis Library) provides a set of tools for loading, manipulating and analyzing tabular data.

Pandas dataframes can be created by reading a CSV file (or TSV, JSON, SQL, etc.):

# df = pd.read_csv("file.csv")
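Since "file.csv" is not available here, a minimal sketch can feed pd.read_csv an in-memory string instead; the contents below are illustrative values rounded from the grid search results. The same reader handles TSV through its sep argument, and other formats have their own readers such as pd.read_json and pd.read_sql.

```python
import io

import pandas as pd

# Illustrative in-memory CSV standing in for "file.csv"
csv_text = "C,mean_test_score\n0.001,0.967\n0.01,0.965\n0.1,0.953\n"
df_csv = pd.read_csv(io.StringIO(csv_text))
print(df_csv)

# Same data as tab-separated values, read with sep="\t"
tsv_text = csv_text.replace(",", "\t")
df_tsv = pd.read_csv(io.StringIO(tsv_text), sep="\t")
print(df_tsv.equals(df_csv))  # True
```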

Pandas dataframes can also be created directly from a dictionary of arrays, such as grid_search.cv_results_:

print(grid_search.cv_results_)

{'mean_fit_time': array([0.012708 , 0.01136432, 0.01144166, 0.02166719, 0.07240119,

0.06853347, 0.06847544, 0.06964402, 0.07199402, 0.07125392]), 'std_fit_time': array([0.00119576, 0.00028361, 0.00069896, 0.00088312, 0.0011855 ,

0.00112866, 0.00268612, 0.00313414, 0.00544413, 0.00527056]), 'mean_score_time': array([0.00078311, 0.00059404, 0.00057449, 0.00061421, 0.00077777,

0.00080886, 0.00088906, 0.00090394, 0.00081658, 0.00091605]), 'std_score_time': array([2.96987588e-04, 9.94822884e-05, 3.36971073e-05, 2.81335540e-05,

6.25741177e-05, 5.08916395e-05, 2.31639276e-04, 1.11696659e-04,

4.55138507e-05, 1.53209449e-04]), 'param_C': masked_array(data=[1e-06, 1e-05, 0.0001, 0.001, 0.01, 0.1, 1.0, 10.0,

100.0, 1000.0],

mask=[False, False, False, False, False, False, False, False,

False, False],

fill_value='?',

dtype=object), 'params': [{'C': 1e-06}, {'C': 1e-05}, {'C': 0.0001}, {'C': 0.001}, {'C': 0.01}, {'C': 0.1}, {'C': 1.0}, {'C': 10.0}, {'C': 100.0}, {'C': 1000.0}], 'split0_test_score': array([0.84375 , 0.90625 , 0.9375 , 0.96180556, 0.95833333,

0.94791667, 0.9375 , 0.94444444, 0.94097222, 0.93055556]), 'split1_test_score': array([0.86458333, 0.91666667, 0.94097222, 0.96527778, 0.96180556,

0.96527778, 0.96875 , 0.97222222, 0.96527778, 0.95486111]), 'split2_test_score': array([0.90940767, 0.94425087, 0.96864111, 0.96864111, 0.96864111,

0.94773519, 0.91986063, 0.94076655, 0.93728223, 0.91637631]), 'split3_test_score': array([0.83972125, 0.90592334, 0.93728223, 0.96167247, 0.96167247,

0.95470383, 0.94773519, 0.95121951, 0.95121951, 0.94425087]), 'split4_test_score': array([0.8989547 , 0.93728223, 0.96864111, 0.97909408, 0.97212544,

0.95121951, 0.94425087, 0.94425087, 0.94773519, 0.95121951]), 'mean_test_score': array([0.87128339, 0.92207462, 0.95060734, 0.9672982 , 0.96451558,

0.9533706 , 0.94361934, 0.95058072, 0.94849739, 0.93945267]), 'std_test_score': array([0.02834893, 0.01589708, 0.01478263, 0.00643189, 0.00507105,

0.00647594, 0.01581652, 0.01133844, 0.00971576, 0.01421674]), 'rank_test_score': array([10, 9, 4, 1, 2, 3, 7, 5, 6, 8], dtype=int32), 'split0_train_score': array([0.88250653, 0.92863359, 0.96344648, 0.98259356, 0.9921671 ,

0.99738903, 0.99303742, 0.99825936, 0.99303742, 0.98781549]), 'split1_train_score': array([0.87989556, 0.93037424, 0.9643168 , 0.98259356, 0.99390775,

0.99564839, 0.99390775, 0.99651871, 0.9921671 , 0.98868581]), 'split2_train_score': array([0.86869565, 0.9226087 , 0.96 , 0.9826087 , 0.99565217,

0.99826087, 0.98869565, 0.9973913 , 0.99652174, 0.99391304]), 'split3_train_score': array([0.88 , 0.93304348, 0.96173913, 0.98086957, 0.99304348,

0.99217391, 0.99391304, 0.9773913 , 0.98956522, 0.99391304]), 'split4_train_score': array([0.87304348, 0.92521739, 0.9573913 , 0.98173913, 0.99391304,

0.99565217, 0.99478261, 0.99391304, 0.99304348, 0.99652174]), 'mean_train_score': array([0.87682824, 0.92797548, 0.96137874, 0.9820809 , 0.99373671,

0.99582488, 0.99286729, 0.99269474, 0.99286699, 0.99216983]), 'std_train_score': array([0.0051415 , 0.00369543, 0.00248347, 0.00069114, 0.0011553 ,

0.00208668, 0.0021576 , 0.00778877, 0.00222751, 0.00335008])}

df = pd.DataFrame(grid_search.cv_results_)

df

| | mean_fit_time | std_fit_time | mean_score_time | std_score_time | param_C | params | split0_test_score | split1_test_score | split2_test_score | split3_test_score | ... | mean_test_score | std_test_score | rank_test_score | split0_train_score | split1_train_score | split2_train_score | split3_train_score | split4_train_score | mean_train_score | std_train_score |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0.012708 | 0.001196 | 0.000783 | 0.000297 | 0.000001 | {'C': 1e-06} | 0.843750 | 0.864583 | 0.909408 | 0.839721 | ... | 0.871283 | 0.028349 | 10 | 0.882507 | 0.879896 | 0.868696 | 0.880000 | 0.873043 | 0.876828 | 0.005141 |
| 1 | 0.011364 | 0.000284 | 0.000594 | 0.000099 | 0.00001 | {'C': 1e-05} | 0.906250 | 0.916667 | 0.944251 | 0.905923 | ... | 0.922075 | 0.015897 | 9 | 0.928634 | 0.930374 | 0.922609 | 0.933043 | 0.925217 | 0.927975 | 0.003695 |
| 2 | 0.011442 | 0.000699 | 0.000574 | 0.000034 | 0.0001 | {'C': 0.0001} | 0.937500 | 0.940972 | 0.968641 | 0.937282 | ... | 0.950607 | 0.014783 | 4 | 0.963446 | 0.964317 | 0.960000 | 0.961739 | 0.957391 | 0.961379 | 0.002483 |
| 3 | 0.021667 | 0.000883 | 0.000614 | 0.000028 | 0.001 | {'C': 0.001} | 0.961806 | 0.965278 | 0.968641 | 0.961672 | ... | 0.967298 | 0.006432 | 1 | 0.982594 | 0.982594 | 0.982609 | 0.980870 | 0.981739 | 0.982081 | 0.000691 |
| 4 | 0.072401 | 0.001186 | 0.000778 | 0.000063 | 0.01 | {'C': 0.01} | 0.958333 | 0.961806 | 0.968641 | 0.961672 | ... | 0.964516 | 0.005071 | 2 | 0.992167 | 0.993908 | 0.995652 | 0.993043 | 0.993913 | 0.993737 | 0.001155 |
| 5 | 0.068533 | 0.001129 | 0.000809 | 0.000051 | 0.1 | {'C': 0.1} | 0.947917 | 0.965278 | 0.947735 | 0.954704 | ... | 0.953371 | 0.006476 | 3 | 0.997389 | 0.995648 | 0.998261 | 0.992174 | 0.995652 | 0.995825 | 0.002087 |
| 6 | 0.068475 | 0.002686 | 0.000889 | 0.000232 | 1.0 | {'C': 1.0} | 0.937500 | 0.968750 | 0.919861 | 0.947735 | ... | 0.943619 | 0.015817 | 7 | 0.993037 | 0.993908 | 0.988696 | 0.993913 | 0.994783 | 0.992867 | 0.002158 |
| 7 | 0.069644 | 0.003134 | 0.000904 | 0.000112 | 10.0 | {'C': 10.0} | 0.944444 | 0.972222 | 0.940767 | 0.951220 | ... | 0.950581 | 0.011338 | 5 | 0.998259 | 0.996519 | 0.997391 | 0.977391 | 0.993913 | 0.992695 | 0.007789 |
| 8 | 0.071994 | 0.005444 | 0.000817 | 0.000046 | 100.0 | {'C': 100.0} | 0.940972 | 0.965278 | 0.937282 | 0.951220 | ... | 0.948497 | 0.009716 | 6 | 0.993037 | 0.992167 | 0.996522 | 0.989565 | 0.993043 | 0.992867 | 0.002228 |
| 9 | 0.071254 | 0.005271 | 0.000916 | 0.000153 | 1000.0 | {'C': 1000.0} | 0.930556 | 0.954861 | 0.916376 | 0.944251 | ... | 0.939453 | 0.014217 | 8 | 0.987815 | 0.988686 | 0.993913 | 0.993913 | 0.996522 | 0.992170 | 0.003350 |

10 rows × 21 columns
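As the table shows, each key of the dictionary becomes a column and each array position becomes a row. A minimal sketch with a hypothetical three-key dictionary shaped like cv_results_ (values rounded from the table above):

```python
import numpy as np
import pandas as pd

# Hypothetical, much smaller dict shaped like cv_results_;
# all arrays must have the same length (one entry per row)
results = {
    'param_C': np.array([0.001, 0.01, 0.1]),
    'mean_test_score': np.array([0.967, 0.965, 0.953]),
    'rank_test_score': np.array([1, 2, 3]),
}
small_df = pd.DataFrame(results)
print(small_df.shape)          # (3, 3)
print(list(small_df.columns))  # ['param_C', 'mean_test_score', 'rank_test_score']
```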

Pandas columns are Series objects backed by Numpy arrays, so they support the same boolean-mask indexing:

df[df['param_C'] < 0.01]

| | mean_fit_time | std_fit_time | mean_score_time | std_score_time | param_C | params | split0_test_score | split1_test_score | split2_test_score | split3_test_score | ... | mean_test_score | std_test_score | rank_test_score | split0_train_score | split1_train_score | split2_train_score | split3_train_score | split4_train_score | mean_train_score | std_train_score |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0.012708 | 0.001196 | 0.000783 | 0.000297 | 0.000001 | {'C': 1e-06} | 0.843750 | 0.864583 | 0.909408 | 0.839721 | ... | 0.871283 | 0.028349 | 10 | 0.882507 | 0.879896 | 0.868696 | 0.880000 | 0.873043 | 0.876828 | 0.005141 |
| 1 | 0.011364 | 0.000284 | 0.000594 | 0.000099 | 0.00001 | {'C': 1e-05} | 0.906250 | 0.916667 | 0.944251 | 0.905923 | ... | 0.922075 | 0.015897 | 9 | 0.928634 | 0.930374 | 0.922609 | 0.933043 | 0.925217 | 0.927975 | 0.003695 |
| 2 | 0.011442 | 0.000699 | 0.000574 | 0.000034 | 0.0001 | {'C': 0.0001} | 0.937500 | 0.940972 | 0.968641 | 0.937282 | ... | 0.950607 | 0.014783 | 4 | 0.963446 | 0.964317 | 0.960000 | 0.961739 | 0.957391 | 0.961379 | 0.002483 |
| 3 | 0.021667 | 0.000883 | 0.000614 | 0.000028 | 0.001 | {'C': 0.001} | 0.961806 | 0.965278 | 0.968641 | 0.961672 | ... | 0.967298 | 0.006432 | 1 | 0.982594 | 0.982594 | 0.982609 | 0.980870 | 0.981739 | 0.982081 | 0.000691 |

4 rows × 21 columns
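Masks can be combined and used together with column selection. A minimal sketch on a hypothetical four-row dataframe (values rounded from the table above):

```python
import pandas as pd

# Hypothetical scores for a few values of C
df_small = pd.DataFrame({
    'param_C': [1e-4, 1e-3, 1e-2, 1e-1],
    'mean_test_score': [0.951, 0.967, 0.965, 0.953],
})

# Each comparison yields a boolean Series; combine them with & (and) or | (or).
# Parentheses are required because & binds tighter than < and > in Python.
mask = (df_small['param_C'] < 0.05) & (df_small['mean_test_score'] > 0.96)
print(df_small[mask])

# .loc takes a row mask and a column label at the same time
print(df_small.loc[mask, 'param_C'].tolist())  # [0.001, 0.01]

# .to_numpy() returns the underlying Numpy array
print(type(df_small['mean_test_score'].to_numpy()))  # <class 'numpy.ndarray'>
```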

Dataframes also provide most of the functionality you would expect as a database user (df.sort_values, df.groupby, df.join, pd.concat, etc.).
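A minimal sketch mapping a few of these operations to their SQL counterparts, on hypothetical per-fold scores (the data and the labels dataframe are illustrative assumptions). Note that concat is a top-level pandas function rather than a dataframe method.

```python
import pandas as pd

# Hypothetical per-fold scores for two values of C
scores = pd.DataFrame({
    'C': [0.01, 0.01, 1.0, 1.0],
    'fold': [0, 1, 0, 1],
    'test_score': [0.96, 0.97, 0.94, 0.95],
})

# ORDER BY test_score DESC
ranked = scores.sort_values('test_score', ascending=False)
print(ranked)

# GROUP BY C with an average per group
mean_by_C = scores.groupby('C')['test_score'].mean()
print(mean_by_C)

# JOIN on the index: attach a (hypothetical) label to each C
labels = pd.DataFrame({'label': ['small', 'large']}, index=[0.01, 1.0])
joined = mean_by_C.to_frame().join(labels)
print(joined)

# UNION ALL: stack rows from two dataframes with the same columns
more = pd.DataFrame({'C': [100.0], 'fold': [0], 'test_score': [0.94]})
combined = pd.concat([scores, more], ignore_index=True)
print(combined.shape)  # (5, 3)
```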

import matplotlib.pyplot as plt

fig, ax = plt.subplots()

ax.plot(df['mean_test_score'], label="validation accuracy")

ax.plot(df['mean_train_score'], label="training accuracy")

# Fix the tick positions (one per row) before labelling them with the values of C

ax.set_xticks(range(len(df)))

ax.set_xticklabels(df['param_C'])

ax.set_xlabel("C")

ax.set_ylabel("Accuracy")

ax.legend(loc='best');
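An alternative is to plot against the actual values of C on a logarithmic axis, which avoids setting tick labels by hand. A minimal sketch, using a hypothetical stand-in dataframe with values rounded from the table above; the cast to float is there because cv_results_ stores param_C with an object dtype.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend so the sketch also runs outside Jupyter
import matplotlib.pyplot as plt
import pandas as pd

# Hypothetical stand-in for df = pd.DataFrame(grid_search.cv_results_)
df_plot = pd.DataFrame({
    'param_C': [1e-3, 1e-2, 1e-1, 1.0],
    'mean_test_score': [0.967, 0.965, 0.953, 0.944],
    'mean_train_score': [0.982, 0.994, 0.996, 0.993],
})

fig, ax = plt.subplots()
C = df_plot['param_C'].astype(float)  # object dtype in real cv_results_, so cast first
ax.plot(C, df_plot['mean_test_score'], marker='o', label="validation accuracy")
ax.plot(C, df_plot['mean_train_score'], marker='o', label="training accuracy")
ax.set_xscale('log')  # C spans several orders of magnitude
ax.set_xlabel("C")
ax.set_ylabel("Accuracy")
ax.legend(loc='best')
```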

Other packages#

Other packages that didn’t make the cut:

Scipy: a scientific computing library built on top of Numpy

Scrapy: a web crawling library

pdb: a debugger for Python (not ML-specific but terribly useful)

tqdm: a progress bar (not ML-specific)

Next time: